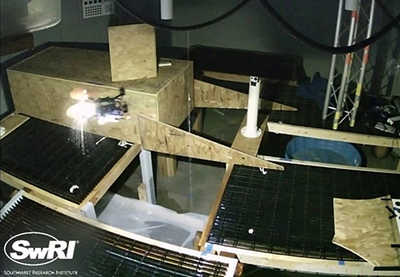

Figure 1: UAS autonomously avoiding an obstacle during a test flight in a dark, GPS-denied environment. The UAS is circled in red.

Background

Autonomous unmanned aerial system (UAS) flight requires three main capabilities: perception, localization, and navigation and control. In other words, the UAS must be able to 1) perceive and understand its environment, 2) determine its position in that environment, and 3) interact with that environment. For fully autonomous flight, the UAS must perform all of these functions using only on-board computers, which can be severely limited in computational power by size, weight, and power constraints. During this internal research and development program, the team investigated whether algorithms could be developed to run on the computationally constrained hardware required for UAS operations. Research efforts focused on camera-based perception, navigation and control, Global Positioning System (GPS)-denied localization, and algorithm optimization. Research and development were needed in these areas because of key differences between ground-based autonomous vehicles and small, autonomous UAS.

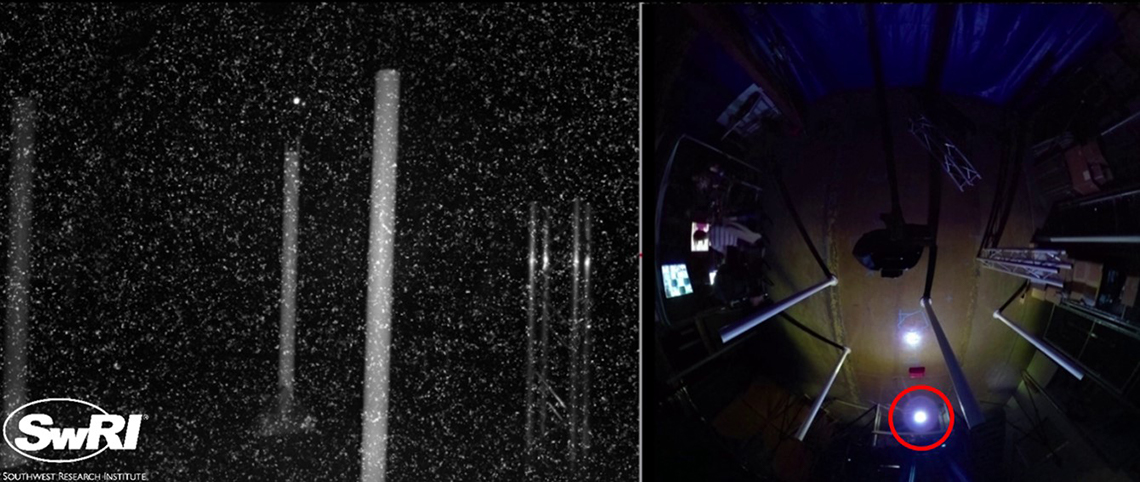

Figure 2: UAS autonomously flying in a confined space containing dripping water.

Approach

The primary objective of this research effort was to determine whether SwRI’s core UAS autonomy technology offerings (i.e., perception, localization, and navigation and control) could be optimized to run on computationally constrained hardware so that we could offer them in future client-funded programs. To accomplish this objective, the effort was split into three phases. Phase 1 focused on building the UAS platforms and developing the individual autonomy components. Phase 2 focused on enhancing system capabilities, integrating core software components, and adapting the system for operation in dripping water. Phase 3 focused on refining and optimizing the core algorithms and developing advanced features.

Figure 3: UAS autonomously flying through an obstacle course in the dark and in the presence of visual noise. Left: UAS camera image. Right: Overhead view of obstacle course. The UAS is circled in red.

Accomplishments

The team developed the UAS hardware and the optimized software needed to fly a UAS autonomously in a visually degraded, GPS-denied environment. The software work covered obstacle detection and avoidance, landmark detection, portal detection (including entry and exit), GPS-denied localization, global path planning, local path planning, mission control, and algorithm optimization.