Background

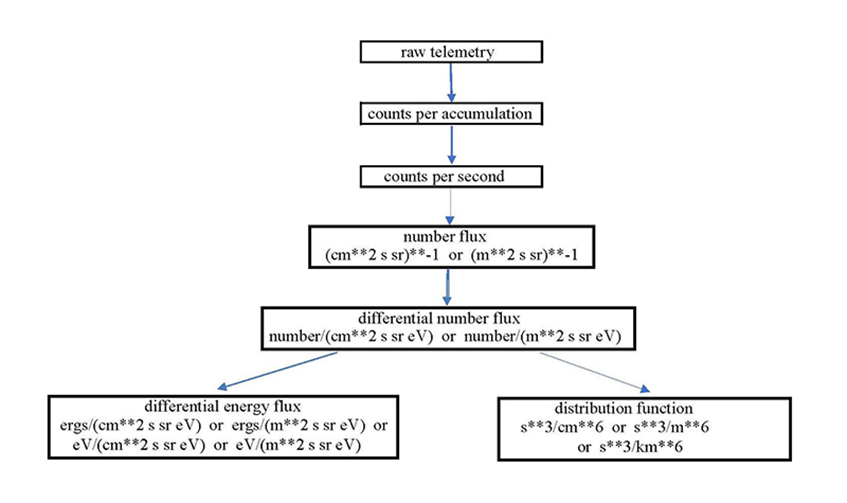

In any scientific endeavor, manipulating data during the analysis phase is one of the major steps toward a successful conclusion. Space physics is no exception; in fact, it may depend more heavily than other disciplines on combining and correlating different measured parameters during analysis. To share data, teams deliver it to data archive sites in specified file formats and data levels, including higher-level data products that contain one or more sets of physical units derived from the raw data (e.g., counts, flux, phase space density). In space physics, these physical units typically build upon each other in a tree-like fashion. For example, data from an electron spectrometer can be transformed into the units shown in Figure 1.
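As a rough illustration of this tree-like dependency, the sketch below records each derived unit together with its parent unit and a parent-to-child conversion, so any unit can be computed on demand from one of its ancestors. The specific tree (counts → count rate → differential flux, which then branches into differential energy flux and phase space density), the parameter names (`accum_time`, `geom_factor`, `energy_width`, `efficiency`, `energy`, `mass`), and the simplified formulas are assumptions for illustration; they are not taken from Figure 1 or from any instrument's actual calibration. All parameters are assumed to be in one consistent unit system (e.g., SI).

```python
from typing import Callable, Dict, List, Optional, Tuple

Params = Dict[str, float]
Converter = Callable[[float, Params], float]

def to_count_rate(counts: float, p: Params) -> float:
    # Counts accumulated over one sample interval -> counts per second.
    return counts / p["accum_time"]

def to_flux(rate: float, p: Params) -> float:
    # Simplified differential number flux: j = R / (G * dE * efficiency).
    return rate / (p["geom_factor"] * p["energy_width"] * p["efficiency"])

def to_energy_flux(flux: float, p: Params) -> float:
    # Differential energy flux: E * j.
    return p["energy"] * flux

def to_psd(flux: float, p: Params) -> float:
    # Phase space density from differential number flux: f = m^2 * j / (2E).
    return p["mass"] ** 2 * flux / (2.0 * p["energy"])

# unit -> (parent unit, converter from parent); the root unit has no parent.
UNIT_TREE: Dict[str, Tuple[Optional[str], Optional[Converter]]] = {
    "counts": (None, None),
    "count_rate": ("counts", to_count_rate),
    "flux": ("count_rate", to_flux),
    "energy_flux": ("flux", to_energy_flux),
    "psd": ("flux", to_psd),
}

def path_from(source: str, target: str) -> List[str]:
    """Units to apply, in order, to go from source down to target."""
    path: List[str] = []
    unit = target
    while unit != source:
        parent, _ = UNIT_TREE[unit]
        if parent is None:
            raise ValueError(f"{source!r} is not an ancestor of {target!r}")
        path.append(unit)
        unit = parent
    path.reverse()
    return path

def convert(value: float, source: str, target: str, p: Params) -> float:
    """Convert value from the source unit to the target unit by walking the tree."""
    for unit in path_from(source, target):
        _, fn = UNIT_TREE[unit]
        assert fn is not None  # only the root lacks a converter
        value = fn(value, p)
    return value

if __name__ == "__main__":
    # Toy parameter values chosen so the arithmetic is easy to follow.
    params = {"accum_time": 0.5, "geom_factor": 2.0, "energy_width": 1.0,
              "efficiency": 1.0, "energy": 4.0, "mass": 2.0}
    print(convert(100.0, "counts", "psd", params))          # 50.0
    print(convert(100.0, "counts", "energy_flux", params))  # 400.0
```

Because each level is derived from its parent only when requested, correcting a calibration parameter such as `geom_factor` changes every downstream result the next time it is computed, without regenerating stored files for each level.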

These data sets are typically produced by software that contains the algorithms for processing the data to the various data levels. Whenever a calibration factor or a processing algorithm must be corrected, all data levels usually have to be reprocessed, which can be both time-consuming and expensive.

Figure 1: Data transformation for an electron spectrometer.

Approach

The objective of the research was to explore how physical quantities vital to science analysis can be developed more quickly through real-time conversion of telemetry and/or derived data, avoiding time-consuming reprocessing. A further goal was to keep the new data transformation environment as simple as possible. To that end, a generic unit conversion mechanism was developed by combining an embeddable scripting language with a free software development tool that connects programs written in a variety of high-level programming languages, creating high-level interpreted or compiled programming environments. At the core of this mechanism is a software library of routines that the user's program calls to manipulate the data specified in a configuration file and to retrieve the algorithm to execute from a script file. As a result, data in both the Common Data Format (CDF) and the Instrument Data File Set (IDFS) formats can be accessed and manipulated in the same way.
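A minimal sketch of this configuration-driven flow might look like the following. It assumes Python as the embedded scripting language, an INI-style configuration parsed with the standard `configparser` module, and a script that defines a function named `convert`. The readers `read_cdf` and `read_idfs` are hypothetical placeholders that return canned values rather than calling a real CDF or IDFS library, and the configuration keys (`format`, `source`, `variable`) are likewise illustrative. The configuration and script are passed as strings so the example is self-contained; in practice they would be read from files.

```python
import configparser
from typing import Callable, Dict, List, Sequence

def read_cdf(source: str, variable: str) -> List[float]:
    """Hypothetical CDF reader; returns canned values instead of reading `source`."""
    return [10.0, 20.0, 30.0]

def read_idfs(source: str, variable: str) -> List[float]:
    """Hypothetical IDFS reader with the same signature as read_cdf."""
    return [10.0, 20.0, 30.0]

# Both formats are exposed through one interface, keyed by the configured format name.
READERS: Dict[str, Callable[[str, str], List[float]]] = {
    "CDF": read_cdf,
    "IDFS": read_idfs,
}

Algorithm = Callable[[Sequence[float]], List[float]]

def load_algorithm(script_text: str) -> Algorithm:
    """Run the script and return the `convert` function it defines (trusted scripts only)."""
    namespace: Dict[str, object] = {}
    exec(script_text, namespace)
    fn = namespace.get("convert")
    if not callable(fn):
        raise ValueError("script must define a callable named 'convert'")
    return fn

def run(config_text: str, script_text: str) -> List[float]:
    """Read the data named in the configuration and apply the scripted algorithm."""
    config = configparser.ConfigParser()
    config.read_string(config_text)
    section = config["input"]
    reader = READERS[section["format"].upper()]
    data = reader(section["source"], section["variable"])
    return load_algorithm(script_text)(data)

CONFIG = """
[input]
format = CDF
source = example.cdf
variable = counts
"""

SCRIPT = """
def convert(values):
    # Example algorithm: scale each value by a constant factor.
    return [2.0 * v for v in values]
"""

if __name__ == "__main__":
    print(run(CONFIG, SCRIPT))  # [20.0, 40.0, 60.0]
```

The scripted algorithm never sees which file format the data came from, so changing the algorithm means editing the script text rather than recompiling the host program. Since `exec` runs arbitrary code, this pattern is only appropriate for trusted scripts.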

Accomplishments

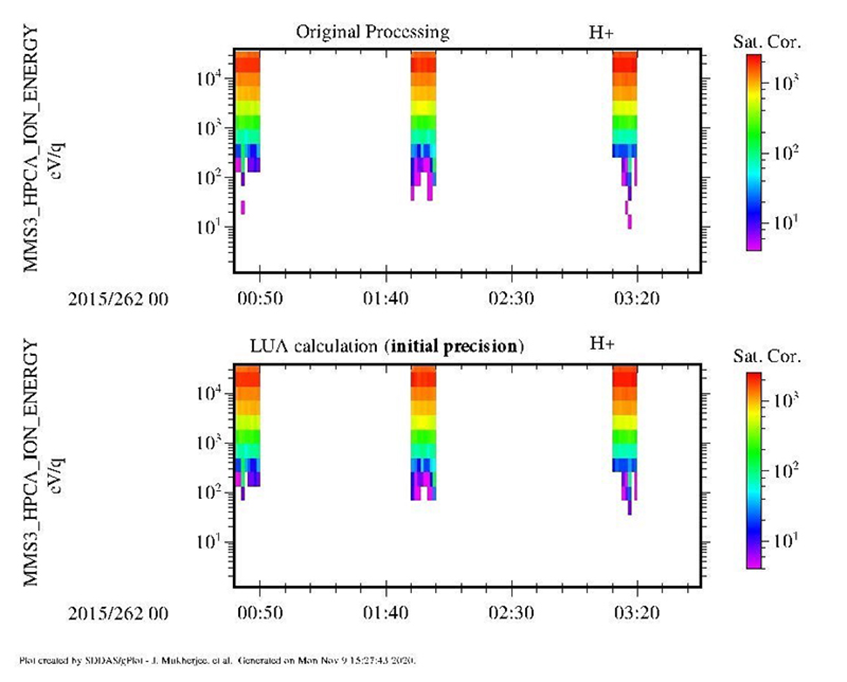

The research focused on one question: Is it possible to create a generic interface with algorithmic capability that allows real-time derivation of science and engineering measurements? The results support this premise, showing that data can easily be changed or updated and then validated immediately, in real time, without reprocessing the entire original data set. To demonstrate this, a script was written that reads time-dependent multiplier values for correcting saturation from an external text file. The saturation-corrected data generated by the script were then compared numerically against the saturation-corrected data contained within the original data set. Initially, applying the same algorithm produced results that were similar but not identical, as Figure 2 shows; the data are plotted on a time scale chosen to visually magnify these numerical differences.
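The saturation-correction step can be sketched as a lookup of the multiplier in effect at each sample time, followed by a multiplication. The text-file layout (one `start_time multiplier` pair per line, with `#` marking comments) and the piecewise-constant rule (each multiplier applies from its start time until the next one begins) are assumptions; the source does not specify the file format or how multipliers are applied between entries.

```python
import bisect
from typing import List, Sequence, Tuple

def parse_multipliers(text: str) -> List[Tuple[float, float]]:
    """Parse 'start_time multiplier' pairs, one per line; '#' lines are comments."""
    table: List[Tuple[float, float]] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        start, mult = line.split()
        table.append((float(start), float(mult)))
    table.sort()
    return table

def correct_saturation(times: Sequence[float], values: Sequence[float],
                       table: List[Tuple[float, float]]) -> List[float]:
    """Multiply each value by the multiplier whose start time is the latest one <= its time."""
    starts = [t for t, _ in table]
    corrected: List[float] = []
    for t, v in zip(times, values):
        i = bisect.bisect_right(starts, t) - 1
        if i < 0:
            raise ValueError(f"no multiplier defined at time {t}")
        corrected.append(v * table[i][1])
    return corrected

if __name__ == "__main__":
    # Hypothetical multiplier file contents and samples.
    text = "# start multiplier\n0.0 1.0\n10.0 1.25\n20.0 1.5\n"
    table = parse_multipliers(text)
    print(correct_saturation([5.0, 10.0, 15.0, 25.0], [100.0] * 4, table))
    # [100.0, 125.0, 125.0, 150.0]
```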

Figure 2: Plot of the script-generated data set versus the original data set, using the initial precision of the time-dependent multiplier values.

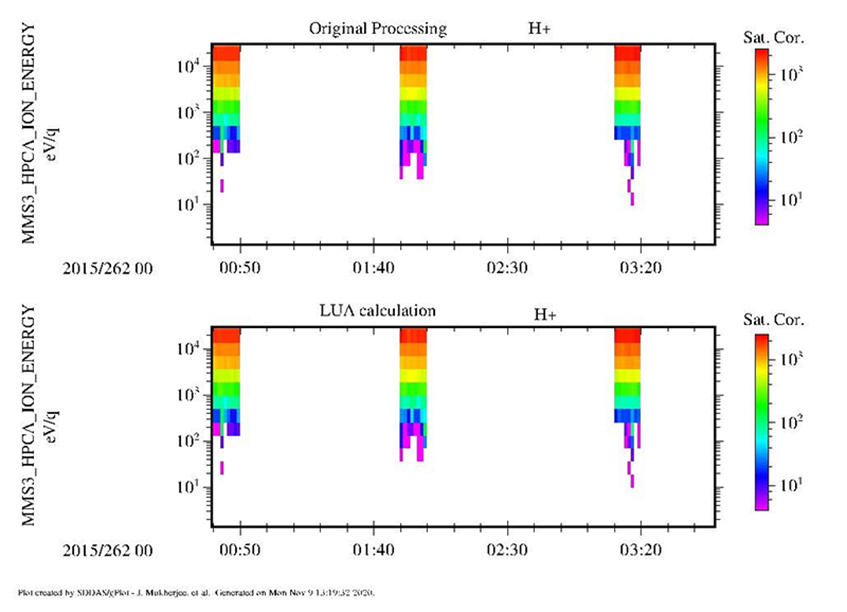

After studying the results, the precision of the multiplier values in the external text file read by the script was increased. Without recompiling any code, the program was rerun, and the script-generated data matched the original saturation-corrected data in the data set. This exercise supported the objective of the research, enabling real-time conversion of data without reprocessing; the results are shown in Figure 3.
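The effect of multiplier precision on such a comparison can be illustrated with made-up numbers: storing a multiplier with only a few significant digits leaves a residual difference from a reference computed at full precision, while carrying enough digits removes it. The multiplier and raw values below are hypothetical, and the maximum absolute difference is just one simple comparison metric; the source does not say which metric was used.

```python
from typing import Sequence

def round_sig(x: float, digits: int) -> float:
    """Round x to the given number of significant digits."""
    return float(f"{x:.{digits}g}")

def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest absolute element-wise difference between two equal-length series."""
    if len(a) != len(b):
        raise ValueError("series must have the same length")
    return max(abs(x - y) for x, y in zip(a, b))

# Hypothetical full-precision multiplier and raw samples.
MULTIPLIER = 1.2345678
RAW = [1000.0, 2500.0, 4000.0]

# Reference: correction applied with the full-precision multiplier.
reference = [v * MULTIPLIER for v in RAW]

# Script output when the text file stores the multiplier with 3 vs. 8 significant digits.
low_precision = [v * round_sig(MULTIPLIER, 3) for v in RAW]
high_precision = [v * round_sig(MULTIPLIER, 8) for v in RAW]

if __name__ == "__main__":
    print("3 digits:", max_abs_diff(reference, low_precision))
    print("8 digits:", max_abs_diff(reference, high_precision))  # 0.0
```

Only the text input changes between the two runs, which mirrors the point of the exercise: the fix required editing data read by the script, not rebuilding the software.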

Figure 3: Plot of the script-generated data set versus the original data set, using increased precision for the time-dependent multiplier values.