We enable automated systems to understand their environments and react when they perceive pedestrians, cyclists, and other dynamic objects. Our solutions integrate machine learning technologies with sensors to advance capabilities in driverless vehicles and unmanned systems, using algorithms such as convolutional neural networks (CNNs), ResNet-50, and Single Shot Detection (SSD).

As automation evolves toward lower power and smaller sizes, we help clients optimize perception across a variety of applications and scales, with a focus on ensuring that real-time robotic solutions operate within clients’ constraints. We produce full-scene segmentation, classifying every material in a scene, to enable autonomous systems to make informed decisions for navigation and inspection.

Technology Offerings

Object Detection & Environment Classification Algorithms for Ground Vehicles

- >99.95% accuracy

- Operation speed greater than 50 fps (VGA images, 768-core GPU)

- Low-cost camera-based recognition of transitions between structured and unstructured environments

- Operation speed greater than 10 fps for full-scene segmentation (1MP images, 768-core GPU)

- Autonomous path recognition

- Lane detection

- Vulnerable road user detection and tracking from a vehicle (e.g., pedestrians and cyclists)

- Vehicle detection and tracking

- Material classification and segmentation (e.g., paved and unpaved road extraction)

- Gesture recognition

- Daytime and nighttime operation

- Lidar-based perception solutions
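To put the frame rates listed above in terms of a real-time budget, the short sketch below converts them into per-frame processing time and pixel throughput. It is illustrative arithmetic only, not a description of our implementation: the function names are hypothetical, "VGA" is assumed to mean 640x480, and "1MP" is assumed to mean exactly 1,000,000 pixels. Because the listed rates are lower bounds ("greater than"), the resulting budgets are upper bounds on per-frame time.

```python
# Illustrative arithmetic only; helper names are hypothetical.

def frame_budget_ms(fps: float) -> float:
    """Longest allowed processing time per frame (ms) to sustain `fps`."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def pixel_rate_mpx_per_s(width: int, height: int, fps: float) -> float:
    """Pixels processed per second, in megapixels, at the given frame rate."""
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    return width * height * fps / 1e6


# Assumption: VGA = 640x480; 1MP modeled as 1000x1000 pixels.
detection_budget_ms = frame_budget_ms(50)          # object detection at 50 fps
segmentation_budget_ms = frame_budget_ms(10)       # full-scene segmentation at 10 fps
detection_rate = pixel_rate_mpx_per_s(640, 480, 50)
segmentation_rate = pixel_rate_mpx_per_s(1000, 1000, 10)

print(f"Detection:    <= {detection_budget_ms:.0f} ms/frame, {detection_rate:.2f} MP/s")
print(f"Segmentation: <= {segmentation_budget_ms:.0f} ms/frame, {segmentation_rate:.2f} MP/s")
```

Under these assumptions, detection at 50 fps leaves at most 20 ms per frame, while full-scene segmentation at 10 fps leaves at most 100 ms per frame.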

Perception-based Inspection Algorithms from Unmanned Systems

- Autonomous inspection of obscure objects (e.g., buildings, bridges, and power lines)

- Automated defect recognition

- Structured light sensing and perception

- Roof inspection

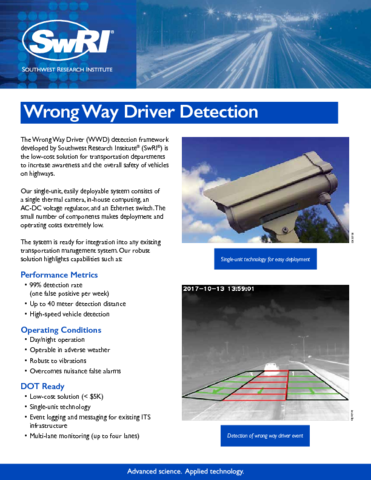

Transportation Infrastructure (Stationary) Object Detection Algorithms

- Wrong-way driver detection

- Vulnerable road user detection and tracking from stationary cameras (e.g., pedestrians and cyclists)

- Traffic and intersection monitoring (Active-Vision Anomaly Detection)

UAV & USV Perception Systems (Air & Sea)

- RGB, longwave infrared (i.e., thermal), midwave infrared, and shortwave infrared perception hardware

- Radar-based perception solutions

- Operation in water and surf environments

- Crop analysis for agriculture

Computational Optimization & Embedded Hardware Implementation

- Limitation analysis of algorithms on desired hardware

- Deep learning algorithms operational on NVIDIA Jetson TX2 (256 CUDA cores)