Background

Figure 1: Robot assembly workcell with a collaborative robot.

Robots are widely used in manufacturing but remain limited in assembly tasks, where it is often impossible to preprogram motions that bring parts into contact with high precision. For example, an insertion task may require tolerances below 100 micrometers, which can be finer than the robot’s repeatability. Efforts to address this generally use force control to define the robot’s behavior, allowing the robot to complete the task by responding to tactile feedback. Approaches using force control frequently rely on reinforcement learning to generate a force-control policy that guides the robot’s actions through the various states of the task. These approaches have been successful in research environments but are not yet widely deployed in industrial production systems. This project aims to provide generic solutions for learning high-precision assembly tasks that are suitable for industry use.

Approach

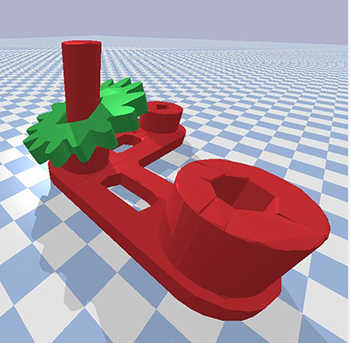

Figure 2: Parts from an example assembly task in simulation.

This project will use a robot executing a force-control policy learned from experience to complete assembly tasks. Force control allows a human-like approach to assembly, in which the robot responds to the contact forces it senses and to the current state of the task. The force-control policy will be learned using reinforcement learning, both in a simulation environment and on physical hardware. Additionally, a high-level decision process will guide the robot’s behavior in the various states of each task. This will enable the robot to perform different assembly tasks involving parts of different physical scales.
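
To illustrate what responding to contact forces means in practice, the following is a minimal sketch of a one-dimensional admittance control law, a common form of force control. It is not the project's learned policy: the controller class, the gains, and the spring-like contact model are all hypothetical and chosen only for illustration. The controller converts the error between a target and a measured contact force into a velocity command, so the tool advances in free space and settles once the target force is reached.

```python
from dataclasses import dataclass


@dataclass
class AdmittanceController:
    """Hypothetical 1-D admittance law: force error -> velocity command."""
    damping: float    # virtual damping in N*s/m (assumed value, not from the project)
    max_speed: float  # speed limit in m/s (assumed value)

    def velocity_command(self, force_target: float, force_measured: float) -> float:
        # Advance while the measured force is below the target, back off above it.
        velocity = (force_target - force_measured) / self.damping
        # Clamp the command to the speed limit.
        return max(-self.max_speed, min(self.max_speed, velocity))


def simulate_contact(steps: int = 4000, dt: float = 0.001) -> float:
    """Press against an assumed linear-spring surface; return the last measured force in N."""
    controller = AdmittanceController(damping=1000.0, max_speed=0.02)
    stiffness = 10000.0  # assumed contact stiffness in N/m
    surface = 0.01       # assumed surface location in m
    force_target = 5.0   # assumed target contact force in N
    position = 0.0
    force = 0.0
    for _ in range(steps):
        # Contact force is zero in free space and grows linearly with penetration.
        force = stiffness * max(0.0, position - surface)
        position += controller.velocity_command(force_target, force) * dt
    return force


if __name__ == "__main__":
    print(f"final contact force: {simulate_contact():.3f} N")
```

In a learned approach such as the one described above, a reinforcement learning policy would typically replace or tune a fixed law of this kind, for example by choosing the target force or gains at each step.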

The force-control component will also be integrated with broader automated capabilities for detecting and handling parts. Both 2D color and 3D depth data will be used to identify parts and to locate the features needed to initiate the force-controlled portions of the assembly tasks. This will be combined with motion planning algorithms so that the robot can move parts autonomously before making contact and executing the assembly tasks.
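
As one hedged illustration of how 2D and 3D data can be combined, the sketch below back-projects a pixel with a known depth into a 3D point in the camera frame using the standard pinhole camera model. The function name and the intrinsic values in the usage example are hypothetical; the project's actual perception pipeline is not described here.

```python
def deproject_pixel(u, v, depth_m, fx, fy, cx, cy):
    """Back-project pixel (u, v) at depth depth_m (meters) into camera-frame XYZ.

    fx, fy are focal lengths in pixels and cx, cy is the principal point;
    all are assumed to come from a prior camera calibration.
    """
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


if __name__ == "__main__":
    # Hypothetical intrinsics for a 640x480 sensor; a feature detected at
    # pixel (350, 260) with a measured depth of 0.5 m.
    point = deproject_pixel(350.0, 260.0, 0.5, fx=600.0, fy=600.0, cx=320.0, cy=240.0)
    print(point)
```

A point obtained this way would still need to be transformed from the camera frame into the robot's base frame before it could serve as a motion planning target.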

Accomplishments

The project team has created a simulation environment with realistic contact physics in which the robot can learn the behaviors needed to complete assembly tasks. Initial results demonstrate successful learning of a peg-in-hole insertion task in the simulated environment. The learned policy will be executed on a physical robot with an integrated force-torque sensor.