Background

Robots are widely used in manufacturing but remain limited in assembly tasks, where it is often impossible to preprogram robot motions that involve close-tolerance fits between parts at a high level of precision. For example, an insertion task may require tolerances below 100 micrometers, which may be tighter than the robot’s repeatability. Efforts to address this generally use force control to define the robot’s behavior, which lets the robot complete a task by making use of tactile feedback. Approaches using force control frequently rely on reinforcement learning to generate a force control policy that guides the robot’s actions through the various states of the task. The goal of this project was to provide generic solutions for learning high-precision assembly tasks for industry use.

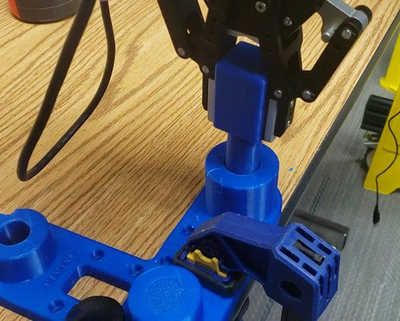

Figure 1: A collaborative robot attempting to insert a peg into a hole.

Approach

This project explored having a robot execute a force-control policy, learned from experience, to complete assembly tasks. Force control allows a human-like approach to assembly, in which the robot responds to the different contact forces and states it encounters. Data for learning the policy through reinforcement learning was obtained from both a simulation environment and physical hardware. In simulation, the OpenAI Gym toolkit was used to apply reinforcement learning, with TensorFlow as the back end for the deep learning components. The Bullet software library was used to simulate the dynamics of parts in continual contact, with substantial parameter tuning to obtain a higher level of fidelity.
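To make the simulation setup more concrete, the sketch below shows the reset/step interface that the classic Gym convention expects from an environment. It is only an illustration under stated assumptions, not the project's environment: the class `ToyPegInsertionEnv`, its one-dimensional contact model, all numeric parameters, and the hand-coded stand-in policy are hypothetical, and the code uses plain Python rather than Gym or Bullet so that it runs on its own.

```python
import random


class ToyPegInsertionEnv:
    """Hypothetical 1-D peg-in-hole environment using the classic Gym
    reset/step convention. All physics here are a toy assumption."""

    def __init__(self, hole_depth=0.02, clearance=1e-4, stiffness=2000.0,
                 compliance=1e-4, max_steps=200, seed=0):
        self.hole_depth = hole_depth    # m, depth at which the peg counts as inserted
        self.clearance = clearance      # m, allowed lateral misalignment (assumed 100 micrometers)
        self.stiffness = stiffness      # N/m, toy side-wall contact stiffness
        self.compliance = compliance    # m/N, displacement per step per unit commanded force
        self.max_steps = max_steps
        self.rng = random.Random(seed)

    def reset(self):
        # Start each episode with a random lateral misalignment of up to 2 mm.
        self.lateral_error = self.rng.uniform(-0.002, 0.002)
        self.depth = 0.0
        self.steps = 0
        return self._observe()

    def _observe(self):
        # Observation: part state plus a contact force proportional to misalignment.
        contact_force = self.stiffness * self.lateral_error
        return (self.lateral_error, self.depth, contact_force)

    def step(self, action):
        lateral_force, insertion_force = action
        self.steps += 1
        self.lateral_error += self.compliance * lateral_force
        # The peg only advances while it is aligned within the clearance.
        if abs(self.lateral_error) < self.clearance:
            self.depth += self.compliance * max(insertion_force, 0.0)
        inserted = self.depth >= self.hole_depth
        done = inserted or self.steps >= self.max_steps
        reward = 1.0 if inserted else 0.0   # sparse reward on task completion
        return self._observe(), reward, done, {}


def hand_coded_policy(observation):
    """Stand-in for a learned policy: push against the sensed contact force."""
    _, _, contact_force = observation
    return (-0.5 * contact_force, 5.0)


def run_episode(env, policy):
    """Roll out one episode and return the final reward and step count."""
    observation = env.reset()
    done = False
    reward = 0.0
    while not done:
        observation, reward, done, _ = env.step(policy(observation))
    return reward, env.steps
```

Calling `run_episode(ToyPegInsertionEnv(), hand_coded_policy)` rolls out a single episode. In a learning setup, a reinforcement learning algorithm would replace `hand_coded_policy` and update it from the rewards collected over many such episodes.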

On hardware, the team integrated a Universal Robots UR10e into a system driven by ROS-Industrial software to give the robot active force control. This enabled a force control policy to output a desired force and torque direction continuously at a high rate, to drive the held part toward the assembled position. The policy’s inputs were the current state of the held part and the current contact forces. Together with a reward function that identified whether the part had reached the final assembled configuration, this was the information needed to close the loop for learning.
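The policy interface described above can be sketched as follows. This is a minimal illustration, not the project's code: the function names, the six-element pose and wrench layout, the force and torque magnitudes, and the 100 micrometer success tolerance are all assumptions made for the example.

```python
import math


def build_observation(part_pose, wrench):
    """Concatenate the held part's state and the measured contact wrench
    into one flat policy input (the layout is an assumption)."""
    return list(part_pose) + list(wrench)


def unit_direction(vec):
    """Normalize a vector; return zeros if the vector has zero length."""
    norm = math.sqrt(sum(v * v for v in vec))
    if norm == 0.0:
        return [0.0] * len(vec)
    return [v / norm for v in vec]


def wrench_command(policy_output, force_mag=10.0, torque_mag=0.5):
    """Turn a 6-D policy output (force direction, torque direction) into a
    commanded wrench. The magnitudes in N and N*m are hypothetical."""
    force_dir = unit_direction(policy_output[:3])
    torque_dir = unit_direction(policy_output[3:6])
    return ([force_mag * f for f in force_dir]
            + [torque_mag * t for t in torque_dir])


def sparse_reward(part_position, goal_position, tolerance=1e-4):
    """Return 1.0 once the part is within `tolerance` meters of the
    assembled position, else 0.0 (the tolerance value is assumed)."""
    distance = math.dist(part_position, goal_position)
    return 1.0 if distance <= tolerance else 0.0
```

For example, `wrench_command([0, 0, -2, 0, 0, 0])` yields a pure downward force of the assumed 10 N magnitude with no torque. A binary reward of this kind matches the description of identifying whether the final configuration was reached, though it gives the learner little guidance before the first success.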

Accomplishments

The project team created a framework for performing assembly tasks. It consists of a simulation environment with realistic contact physics in which the robot can learn, and the software needed to transfer the learned behavior to a physical robot and continue learning from further data collected with the actual physical parts. A key component of this framework was the force-control system that enabled the robot to grasp the part and direct it with an arbitrary force and torque. Although the reinforcement learning algorithms achieved a measure of task success in simulation, additional work is required to enable reliable learning of physical assembly tasks. The framework will remain useful for further exploration of this area, and the project team will leverage it in future efforts to apply reinforcement learning to this type of problem.