Background

An important process in forage agriculture is the production of bales for feed. Bale production involves several machines, including tractors, tedders, rakes, and balers. As part of the baling process, silage material is raked into windrows, which are rows of material left to dry before a baler drives over them and collects the material into bales. Modern systems are being developed to improve this process through a fully integrated automated baling vehicle. Such a system will require advanced perception solutions for precision navigation along the windrow to reduce operator fatigue and improve autonomous capabilities.

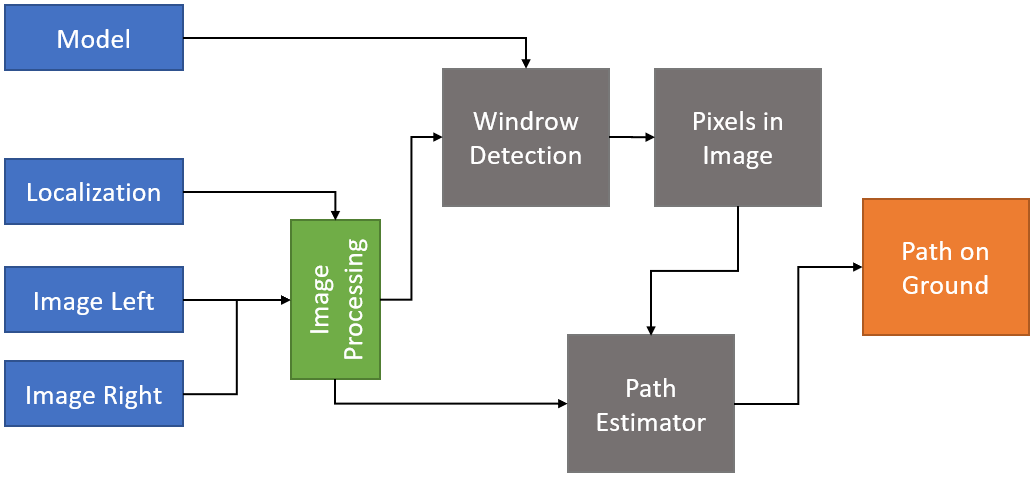

Figure 1: High-level arch

Approach

This research proceeded in three steps: incorporating low-cost sensors into an appliqué system and integrating it onto a vehicle; collecting and labeling data to create a ground-truth database for training and evaluation; and finding a neural network (NN) classification architecture that could predict the center of a windrow, then refining that prediction into a splined path.

The team initially designed a prototype perception system made up of two synchronized coplanar cameras with Sony STARVIS™ IMX327 image sensors and onboard embedded processing (Figures 1 and 2). Data was collected on SwRI-owned land and expanded using typical data augmentation techniques such as skews, transforms, and flips, as well as unique augmentation methods that adjust the apparent lighting of the images to simulate low-light operation. A total of 1,077 images were labeled: 800 for training (~74%) and the remaining 277 for validation and testing (~26%).
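As an illustration of the augmentation step, the sketch below mirrors an image together with its label coordinates and darkens an image to mimic low-light conditions. It is a minimal sketch under stated assumptions, not the project's actual pipeline: the function names, the list-of-rows grayscale image format, the `(x, y)` pixel label format, and the use of a gamma curve for darkening are all hypothetical choices made for this example.

```python
def flip_horizontal(image, points):
    """Mirror a grayscale image (list of pixel rows) and its (x, y) labels.

    Hypothetical helper: the image and label formats are assumptions.
    """
    width = len(image[0])
    flipped = [list(reversed(row)) for row in image]
    # Pixel column x moves to column (width - 1 - x) after mirroring,
    # so the label coordinates must be mirrored the same way.
    flipped_points = [(width - 1 - x, y) for x, y in points]
    return flipped, flipped_points


def simulate_low_light(image, gamma=2.0, max_value=255):
    """Darken an image with a gamma curve (assumed method).

    A gamma above 1 lowers mid-tones while leaving pure black and
    pure white unchanged.
    """
    return [
        [round(max_value * (pixel / max_value) ** gamma) for pixel in row]
        for row in image
    ]
```

The key point is that any geometric augmentation has to be applied to the labels as well as the pixels; a lighting change such as `simulate_low_light` leaves the labels untouched.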

Figure 2: Perception kit

A high-resolution neural network (HRNet) architecture was chosen to model the center of the windrow given the input data. This architecture uses the labeled coordinate information alongside the sensor data to train a model to detect the windrows. A spline is then fitted to the detected windrow to estimate the true path on the ground. The resulting system architecture for generating a path based on windrow detection is shown in Figure 3.
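The spline step can be sketched as follows. The source does not say which spline was used, so this example assumes a uniform Catmull-Rom spline, chosen here only because it passes through every detected center point; the function name, the `(x, y)` point format, and the `samples_per_segment` parameter are hypothetical.

```python
def catmull_rom_path(points, samples_per_segment=10):
    """Return a smooth path through every (x, y) control point.

    Assumed technique: uniform Catmull-Rom interpolation.
    """
    if len(points) < 2:
        return list(points)
    # Repeat the end points so the first and last segments have the
    # four neighbours the formula needs.
    padded = [points[0]] + list(points) + [points[-1]]
    path = []
    for i in range(1, len(padded) - 2):
        p0, p1, p2, p3 = padded[i - 1], padded[i], padded[i + 1], padded[i + 2]
        for step in range(samples_per_segment):
            t = step / samples_per_segment
            # Evaluate the cubic per coordinate; at t = 0 it equals p1.
            path.append(tuple(
                0.5 * (2 * b
                       + (c - a) * t
                       + (2 * a - 5 * b + 4 * c - d) * t ** 2
                       + (3 * b - a - 3 * c + d) * t ** 3)
                for a, b, c, d in zip(p0, p1, p2, p3)
            ))
    # Close the path on the final control point.
    path.append(tuple(float(v) for v in points[-1]))
    return path
```

Given a handful of detected center points ordered along the windrow, `catmull_rom_path` returns a denser sequence of points that a navigation layer could follow. A smoothing spline that does not pass exactly through each detection would be a reasonable alternative when the detections are noisy.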

Accomplishments

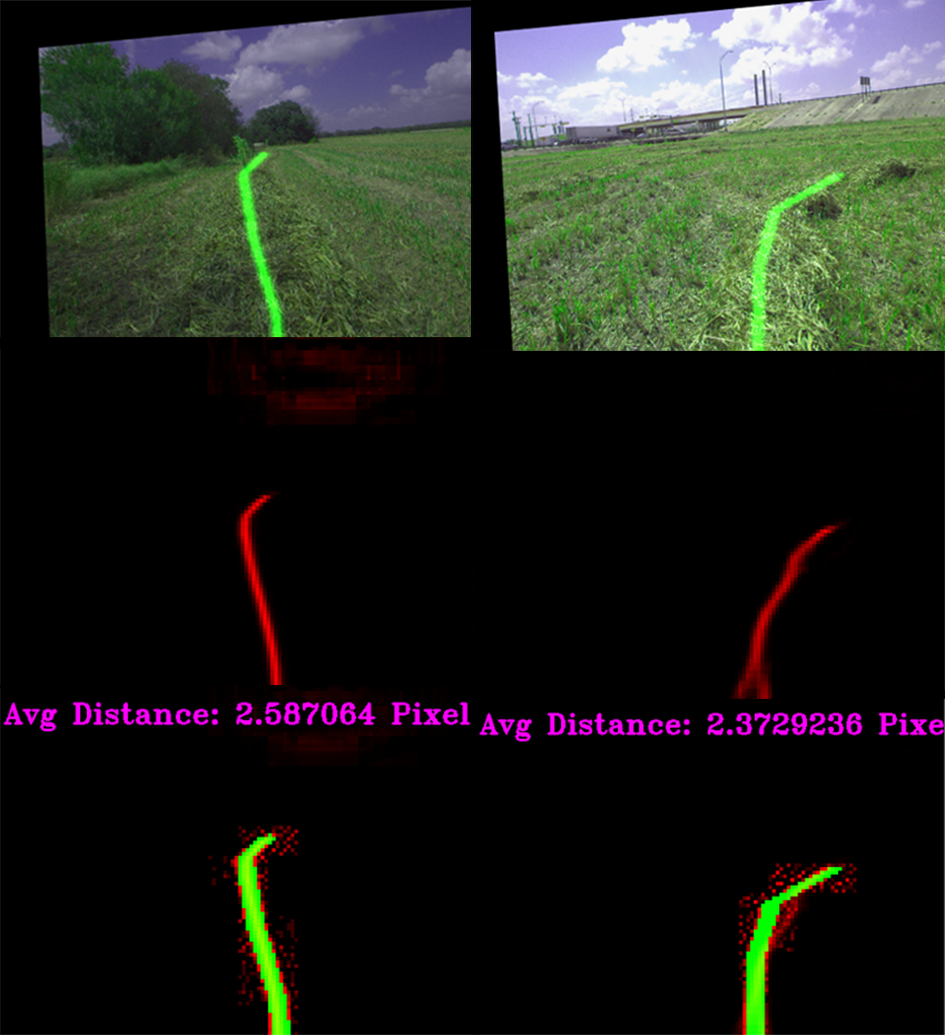

Figure 3: Labeling tool

As a result of this research, we developed a neural network and software architecture capable of detecting windrows in images collected by low‐cost camera hardware to classify a path that can be used for navigation. To measure the validity of the generated path, we calculated the distance variance between the ground truth path label and the predicted label and observed that 96% of validation images resulted in a valid predicted spline, although not all predicted splines met the thresholds for success, as discussed below. This added step increased the time required to label data but provided reasonable results within the necessary time frame.

We also have a dataset of approximately 44,000 images, divided into 32,000 training images and 12,000 validation images. Using a SwRI labeling tool (Figure 3), we annotated a dataset of windrows to serve as training and validation data, which was used during tests. We observed that increasing the RMSE threshold by 0.1 significantly improves our classification rate, to 99.2% positive frames.
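The relationship between the RMSE threshold and the share of positive frames can be sketched as below. This is an illustration under assumptions rather than the project's evaluation code: it assumes the predicted and ground-truth paths are sampled at matching positions, that the error is the Euclidean distance between paired points, and that a frame counts as positive when its RMSE is at or below the threshold. The function names are hypothetical, and the threshold units are not stated in the source.

```python
import math


def path_rmse(predicted, truth):
    """Root-mean-square distance between paired (x, y) path samples."""
    if not predicted or len(predicted) != len(truth):
        raise ValueError("paths must be non-empty and equally sampled")
    squared = [
        (px - tx) ** 2 + (py - ty) ** 2
        for (px, py), (tx, ty) in zip(predicted, truth)
    ]
    return math.sqrt(sum(squared) / len(squared))


def positive_frame_rate(frames, threshold):
    """Fraction of (predicted, truth) frames with RMSE <= threshold."""
    errors = [path_rmse(predicted, truth) for predicted, truth in frames]
    return sum(error <= threshold for error in errors) / len(errors)
```

Because every frame that passes at a given threshold also passes at any larger one, the positive rate can only stay the same or rise as the threshold is relaxed, so a looser threshold trades path accuracy for a higher acceptance rate.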

Figure 4a-f: Spline output