Space systems pose unique challenges for intelligent robotic motion, including a brutal working environment, lack of connectivity and harsh lighting conditions. Southwest Research Institute (SwRI) is overcoming these challenges by leveraging our experience in computer vision and robotics for industrial automation.

This article is part of an occasional series about developing the next generation of robotics for in-space servicing, assembly and manufacturing (ISAM); rendezvous, proximity operations and capture (RPOC); and commercial applications. In 2023, SwRI launched its Maturing Adaptable Space Technologies (MAST) initiative. This article summarizes recent MAST research and answers frequently asked questions about navigating key challenges of robotics in space. Future articles will discuss our research and ISAM topics in more technical detail.

MAST helps advance technology for characterizing resident space objects, on-orbit fueling and other ISAM areas. We focused on two areas: camera-based vision systems and dynamic path planning. Using Drake, a simulation tool for robotics, SwRI developed a robotics simulation package that applies industrial manufacturing best practices to space applications. We also investigated challenges of object identification, trajectory tracking and dynamic motion planning on space-rated systems.

Planning Robotic Motion for In-Space Manufacturing

We set out to simulate, plan and command motions of a robotic arm in microgravity that minimize the momentum imparted to the satellite base. We used a Python software package, based on Drake, that models the dynamics of robotic manipulators maneuvering in microgravity. This software package can plan several types of motion trajectories, measure the momentum those motions generate, and optimize them to reduce that momentum below a target threshold. The package will be used for future hardware-in-the-loop testing featuring an industrial robotic arm on an air-bearing table in SwRI’s new Space Robotics Center.
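To make "momentum imparted to the base" concrete, here is a minimal Python sketch. It is not SwRI's Drake-based package and uses no Drake API. It assumes a hypothetical planar two-link arm with point masses at the link tips, treats the base as fixed while computing the arm's momentum (a truly free-floating base would also move), and uses a rest-to-rest cubic trajectory. The functions `joint_state`, `arm_momentum`, `peak_linear_momentum` and `min_duration`, and all parameter values, are illustrative assumptions.

```python
import math

# Hypothetical planar two-link arm: point masses M1, M2 at the distal end of
# each link (lengths L1, L2), mounted at the origin of the satellite base.
# Simplification: the base is held fixed while the arm's momentum is computed,
# so the reaction on the base is approximated as the negative of that momentum.
L1, L2 = 1.0, 0.8   # link lengths [m] (assumed)
M1, M2 = 5.0, 3.0   # point masses [kg] (assumed)


def joint_state(q0, qf, t, T):
    """Cubic time scaling: rest-to-rest motion from q0 to qf over duration T."""
    tau = t / T
    s = 3 * tau**2 - 2 * tau**3
    ds = (6 * tau - 6 * tau**2) / T
    q = [a + s * (b - a) for a, b in zip(q0, qf)]
    dq = [ds * (b - a) for a, b in zip(q0, qf)]
    return q, dq


def arm_momentum(q, dq):
    """Linear momentum (px, py) and angular momentum lz about the base origin."""
    q1, q12 = q[0], q[0] + q[1]
    w1, w12 = dq[0], dq[0] + dq[1]
    x1, y1 = L1 * math.cos(q1), L1 * math.sin(q1)
    vx1, vy1 = -L1 * math.sin(q1) * w1, L1 * math.cos(q1) * w1
    x2, y2 = x1 + L2 * math.cos(q12), y1 + L2 * math.sin(q12)
    vx2 = vx1 - L2 * math.sin(q12) * w12
    vy2 = vy1 + L2 * math.cos(q12) * w12
    px = M1 * vx1 + M2 * vx2
    py = M1 * vy1 + M2 * vy2
    lz = M1 * (x1 * vy1 - y1 * vx1) + M2 * (x2 * vy2 - y2 * vx2)
    return px, py, lz


def peak_linear_momentum(q0, qf, T, samples=200):
    """Largest |p| over evenly spaced samples (the true peak may sit between them)."""
    peak = 0.0
    for i in range(samples + 1):
        px, py, _ = arm_momentum(*joint_state(q0, qf, T * i / samples, T))
        peak = max(peak, math.hypot(px, py))
    return peak


def min_duration(q0, qf, threshold, T_ref=1.0):
    """Shortest duration whose sampled peak momentum stays at or below threshold.

    Time-scaling a fixed path multiplies every joint velocity, and hence the
    momentum, by T_ref / T, so the answer follows from one reference evaluation.
    """
    peak = peak_linear_momentum(q0, qf, T_ref)
    return T_ref * peak / threshold if peak > 0 else 0.0
```

For example, `min_duration([0.0, 0.0], [1.2, -0.6], threshold=2.0)` returns the shortest move time that keeps the sampled peak linear momentum at or below 2 kg·m/s. Slowing a move only changes its timing; reducing momentum by reshaping the path itself would need a real trajectory optimizer.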

SwRI’s Space Robotics Center features an air-bearing table to simulate low-gravity conditions, allowing engineers to test robot applications for future deployment in zero gravity.

Developing Algorithms for FPGA Computing in Space

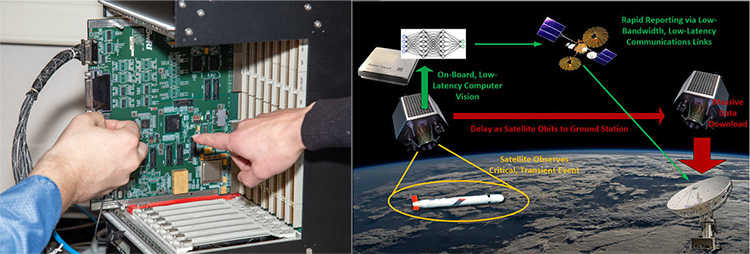

Object detection will be a key function for future in-space manufacturing and assembly tasks. However, identifying objects with machine learning and artificial intelligence, particularly algorithms powered by deep neural networks (DNNs), requires more memory and computational power than most space-ready computers provide. To automate these tasks, spacecraft will need more capable onboard computing. SwRI designed machine vision algorithms to run on a space-ready field programmable gate array (FPGA). Additionally, SwRI investigated new camera vision tools for exploring caves on Earth, which can help inform future exploration of lunar caves and operations in confined areas.

Why is space a challenging environment?

The extreme environment of space limits the sophistication and power of the computers that operate there. Temperatures in low Earth orbit can range from -65°C to +125°C, and the vacuum of space makes cooling these systems challenging. The power available to run computers is limited by the capabilities of solar arrays. Radiation continuously bombards electronics, corrupting data or even permanently damaging digital chips. System weight is at a premium too, with every pound launched to Earth orbit costing over $10,000. Because of these constraints, computational hardware deployed to space generally lags far behind familiar consumer electronics. Computing components must be rigorously tested to evaluate their radiation tolerance, and newer transistor technologies may fail completely. Compute rates must be limited to reduce power consumption and manage spacecraft temperature. Developing software for such systems requires knowledge of lower-level programming languages and of space-rated hardware.
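The launch-cost figure above translates directly into a mass budget. This sketch simply converts kilograms to pounds and multiplies by the article's lower bound of $10,000 per pound; the 5 kg computing payload is a hypothetical example, and real launch prices vary by vehicle and orbit.

```python
# Rough launch-cost arithmetic using the article's figure of over $10,000 per
# pound delivered to Earth orbit (a lower bound, not a quote for any vehicle).
KG_TO_LB = 2.20462262
COST_PER_LB_USD = 10_000


def min_launch_cost_usd(mass_kg):
    """Lower-bound cost of launching mass_kg to Earth orbit."""
    return mass_kg * KG_TO_LB * COST_PER_LB_USD


# A hypothetical 5 kg computing payload costs at least about $110,000 to launch.
print(f"${min_launch_cost_usd(5):,.0f}")
```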

Many space systems lack continuous network connectivity. Teleoperation is not always possible, nor is real-time interaction. Increased autonomy reduces dependence on teleoperation, improving efficiency, and is also expected to improve the return on investment of these expensive systems. Autonomy extends system lifespan by enabling better contingency handling. Automating the intelligent use of resources, including fuel for motion execution and computer memory for vision processing, also extends mission life and enables more years of revenue generation.

How do we use machine vision in space to find objects of interest?

An important enabler for autonomy in robotic space systems is the ability of a spacecraft to recognize and locate other spacecraft and to understand their shape and orientation. Picking out a satellite against a sea of stars isn’t so difficult for a human up close, but a camera system must find the edges, patterns and features that characterize a satellite in order to identify it. The problem becomes harder when the tracked object is highly reflective or moves across the backdrop of Earth, which is much noisier than the vast darkness of space. Going from observing a satellite in a planet’s shadow against the backdrop of space, where its facets and features are easy to separate from the blackness, to observing a satellite directly in front of the Sun, where its reflective surface blends in with the hottest, brightest object visible to the naked eye, is no small feat for a computer vision system.
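Against a black, star-filled background, a crude first pass at finding a satellite can be as simple as thresholding brightness and keeping the largest connected bright region, since stars appear as small, isolated points. The Python sketch below illustrates only that idea; `largest_bright_blob` and its `min_pixels` cutoff are hypothetical, and this is not the perception approach SwRI describes. It is exactly the kind of method that breaks down against Earth's cluttered backdrop or in solar glare.

```python
from collections import deque


def largest_bright_blob(image, threshold, min_pixels=5):
    """Return (centroid_row, centroid_col, pixel_count) of the largest bright
    region in a 2D grayscale image, or None if there is none.

    Regions smaller than min_pixels are treated as stars and ignored.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    best = None
    for r0 in range(rows):
        for c0 in range(cols):
            if seen[r0][c0] or image[r0][c0] < threshold:
                continue
            # Breadth-first flood fill over 4-connected bright pixels.
            queue, pixels = deque([(r0, c0)]), []
            seen[r0][c0] = True
            while queue:
                r, c = queue.popleft()
                pixels.append((r, c))
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = r + dr, c + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and not seen[nr][nc] and image[nr][nc] >= threshold):
                        seen[nr][nc] = True
                        queue.append((nr, nc))
            n = len(pixels)
            if n >= min_pixels and (best is None or n > best[2]):
                best = (sum(p[0] for p in pixels) / n,
                        sum(p[1] for p in pixels) / n, n)
    return best
```

The centroid gives a rough image-plane location to hand to a tracker; recovering shape and orientation requires the edge and feature analysis the article describes.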

SwRI developed feature-tracking algorithms for deployment on FPGA computers. The algorithms can reconstruct the general geometry of a resident space object (RSO) from simulated camera data and accurately assess its rotation.
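One simple way to turn tracked features into a rotation estimate is a least-squares (Procrustes) fit between the same features seen in two frames. The sketch below assumes a planar simplification, features already matched in the same order, and an inter-frame rotation smaller than 180 degrees. A real RSO rotates in three dimensions and would need a 3D formulation such as the Kabsch algorithm. The function names are hypothetical.

```python
import math


def estimate_rotation_2d(points_a, points_b):
    """Least-squares rotation angle (radians) mapping points_a onto points_b.

    Both lists hold the same tracked features, in the same order, in two frames.
    Centering removes translation; the optimal angle then has a closed form:
    atan2(sum of cross products, sum of dot products).
    """
    n = len(points_a)
    ax = sum(p[0] for p in points_a) / n
    ay = sum(p[1] for p in points_a) / n
    bx = sum(p[0] for p in points_b) / n
    by = sum(p[1] for p in points_b) / n
    dot = cross = 0.0
    for (xa, ya), (xb, yb) in zip(points_a, points_b):
        xa, ya, xb, yb = xa - ax, ya - ay, xb - bx, yb - by
        dot += xa * xb + ya * yb
        cross += xa * yb - ya * xb
    return math.atan2(cross, dot)


def rotation_rate(points_a, points_b, dt):
    """Average angular rate (rad/s) between two frames taken dt seconds apart."""
    return estimate_rotation_2d(points_a, points_b) / dt
```

Averaging over many features makes the estimate tolerant of small per-feature tracking noise, which matters when features are hard to localize in harsh lighting.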

SwRI engineers are employing advanced perception algorithms to identify satellites in such difficult imagery, and doing so on space-rated processors. This work includes generating more hardware-in-the-loop data by photographing real objects in simulated environments, such as darkness with stars and a single powerful light source, and using that data to test a wide variety of algorithms. The algorithms are evaluated both for robustness against this data and for computational expense, because they must run on constrained hardware.

Left: Engineers point to a field programmable gate array (FPGA) on a space-ready computer. Right: An illustration depicting how an FPGA onboard a satellite can process a machine learning algorithm using low-precision mathematics.
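Low-precision mathematics typically means replacing 32-bit floating point with small integers, which cuts weight memory by a factor of four at 8 bits and suits the integer multiply-accumulate logic available on FPGAs. The Python sketch below shows symmetric 8-bit quantization of one dot product, the core operation of a neural-network layer. The article does not say which precision or scheme SwRI uses; int8 with a single per-tensor scale is an assumption for illustration, and the function names are hypothetical.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization: floats -> (ints in [-127, 127], scale)."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def int8_dot(weights, inputs):
    """Dot product computed with integer multiply-accumulate, rescaled once."""
    qw, sw = quantize_int8(weights)
    qx, sx = quantize_int8(inputs)
    acc = sum(a * b for a, b in zip(qw, qx))  # integer-only accumulator
    return acc * sw * sx  # single floating-point rescale at the end
```

The integer accumulator does the heavy lifting, and the result differs from the full-precision dot product only by a small rounding error bounded by the two scales.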

How do manufacturing practices add value in space?

Robots have been used on Earth for years in servicing, assembly and manufacturing activities, such as the repeatable fabrication and assembly of large structures. SwRI engineers are implementing the algorithms and logic that make those processes safe and precise on robotic manipulators in space. The next step toward robust automation in space is leveraging industrial best practices so that robotic systems respond to their environment, sensor input data and mission. For example, the same techniques we use to create smooth, fluid movements when welding a car frame can enable clean interception of objects and debris, as well as maintenance work on a structure.
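One widely used industrial technique for smooth, fluid motion is quintic (fifth-order polynomial) time scaling, which brings velocity and acceleration to zero at both ends of a move. The sketch below is a generic single-joint example, not necessarily the profile SwRI uses; `quintic_profile` is a hypothetical name.

```python
def quintic_profile(q0, qf, T, t):
    """Position, velocity and acceleration of a rest-to-rest quintic move.

    Velocity and acceleration are zero at both ends, avoiding the abrupt
    starts and stops that excite vibration (and, in orbit, base reactions).
    Times outside [0, T] are clamped to the nearest endpoint.
    """
    tau = min(max(t / T, 0.0), 1.0)
    d = qf - q0
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    ds = (30 * tau**2 - 60 * tau**3 + 30 * tau**4) / T
    dds = (60 * tau - 180 * tau**2 + 120 * tau**3) / T**2
    return q0 + d * s, d * ds, d * dds
```

Compared with a cubic profile, the quintic adds zero-acceleration boundary conditions, so the commanded torque also starts and ends at zero.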

We are currently refining these techniques for use in microgravity through high-fidelity simulations. Our simulations thus far have included seven-degree-of-freedom (7-DOF) arms in microgravity and in fixed reference frames, where we can closely monitor and test the inertial effects of planned motions in joint and Cartesian space. These include scenarios in which the arms track and then intercept objects, and we have made promising progress on algorithms that autonomously decide when to pursue nearby flying objects.
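A decision about whether to pursue a passing object can start from a simple geometric test: will the object enter the arm's reach soon enough, and slowly enough, to be captured? The Python sketch below assumes straight-line relative motion (ignoring orbital dynamics, so it is only reasonable over short horizons) and a spherical workspace of radius `reach`. The functions `time_to_enter_workspace` and `should_pursue` and their thresholds are hypothetical and are not SwRI's selection algorithm.

```python
import math


def time_to_enter_workspace(rel_pos, rel_vel, reach):
    """Earliest t >= 0 at which an object on a straight-line path comes within
    `reach` of the arm base, or None if it never does.

    Solves |p + v t|^2 = reach^2 for t, assuming constant relative velocity.
    """
    px, py, pz = rel_pos
    vx, vy, vz = rel_vel
    a = vx * vx + vy * vy + vz * vz
    b = 2 * (px * vx + py * vy + pz * vz)
    c = px * px + py * py + pz * pz - reach * reach
    if c <= 0:
        return 0.0  # already inside the workspace
    if a == 0:
        return None  # not moving relative to the arm, and out of reach
    disc = b * b - 4 * a * c
    if disc < 0:
        return None  # closest approach stays outside the workspace
    t = (-b - math.sqrt(disc)) / (2 * a)  # first crossing of the sphere
    return t if t >= 0 else None  # negative means the object is receding


def should_pursue(rel_pos, rel_vel, reach, max_wait_s, max_speed):
    """Hypothetical go/no-go rule: pursue only if the object will enter the
    workspace within max_wait_s and its relative speed allows a safe capture."""
    t = time_to_enter_workspace(rel_pos, rel_vel, reach)
    speed = math.sqrt(sum(v * v for v in rel_vel))
    return t is not None and t <= max_wait_s and speed <= max_speed
```

A flight version would replace the straight-line model with relative orbital dynamics and fold in the arm's joint limits and momentum budget, but the structure of the decision (predict, test reachability, gate on timing and speed) stays the same.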

In summary, SwRI is working to develop the next generation of object identification, trajectory tracking, and dynamic motion planning algorithms and software for the space-rated systems of tomorrow. SwRI engineers are leveraging best practices and cutting-edge techniques from terrestrial machine vision and manufacturing automation for development of robust and self-sustaining space systems.

For more information, contact Meera Towler or visit Space Robotics Engineering.