Background

Southwest Research Institute (SwRI) has developed a wide range of traffic management solutions for state and local governments across the country. However, these systems require training and experience to operate with maximum efficacy. The recent advent of large language models (LLMs) has provided a means of addressing this issue. Capable of interpreting human language, these systems can provide a new and natural means of interacting with complex programs.

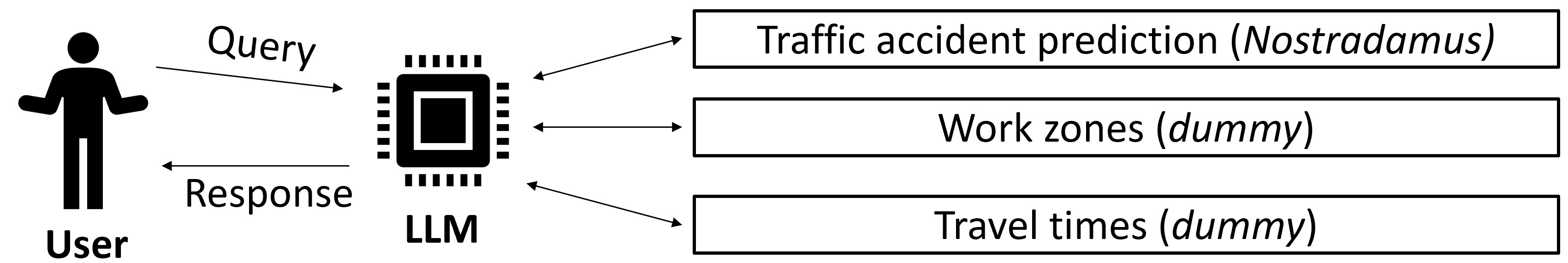

Figure 1: System workflow.

Approach

We sought to determine how LLMs can serve as natural language interfaces to multiple underlying traffic management subsystems. To do so, we used the LangChain framework to connect the components of our approach: a basic web-based user interface, the LLM itself, and three traffic-related subsystems exposed to the LLM as tools. One of these subsystems, a traffic accident prediction model termed “Nostradamus,” is real and derived from a previous internal research project. The other two, which deal with work zones and travel times, are modelled after real data but are ultimately “dummy” interfaces built to demonstrate the ability of an LLM to distinguish between similar and interrelated queries. Detailed textual descriptions of each tool, along with its expected inputs and outputs, were provided to the model.
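The idea of pairing each subsystem with a textual description can be illustrated with a minimal, framework-free Python sketch. This is not the project's implementation and does not use the LangChain API. The tool names, descriptions, stub functions, and the `build_tool_prompt` and `dispatch` helpers are all hypothetical, chosen only to show how descriptions might let a model choose among similar tools.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Tool:
    """A subsystem exposed to the LLM (hypothetical structure)."""
    name: str
    description: str  # text the LLM reads to decide when to call the tool
    run: Callable[[str], str]


# Hypothetical stand-ins for the three subsystems; they return labeled
# placeholder strings, not real traffic data.
def predict_accident_risk(location: str) -> str:
    return f"[stub] accident-risk forecast for {location}"


def lookup_work_zones(location: str) -> str:
    return f"[stub] active work zones near {location}"


def estimate_travel_time(route: str) -> str:
    return f"[stub] travel time along {route}"


TOOLS: Dict[str, Tool] = {
    tool.name: tool
    for tool in (
        Tool(
            "accident_prediction",
            "Input: a road or location. Output: predicted accident risk.",
            predict_accident_risk,
        ),
        Tool(
            "work_zones",
            "Input: a road or location. Output: current work zones nearby.",
            lookup_work_zones,
        ),
        Tool(
            "travel_times",
            "Input: a route. Output: estimated travel time.",
            estimate_travel_time,
        ),
    )
}


def build_tool_prompt(tools: Dict[str, Tool]) -> str:
    """Render the tool descriptions as text to include in the LLM prompt."""
    lines = ["You can call the following tools:"]
    for tool in tools.values():
        lines.append(f"- {tool.name}: {tool.description}")
    return "\n".join(lines)


def dispatch(tool_name: str, tool_input: str) -> str:
    """Run the tool the LLM selected, or report that no such tool exists."""
    tool = TOOLS.get(tool_name)
    if tool is None:
        return f"No tool named '{tool_name}' is available."
    return tool.run(tool_input)


if __name__ == "__main__":
    print(build_tool_prompt(TOOLS))
    # The location below is an arbitrary example input.
    print(dispatch("work_zones", "Main Street"))
```

In a setup like this, the model would see only the rendered descriptions and return a tool name and input, and the surrounding framework would perform the dispatch and pass the tool output back to the model for formatting.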

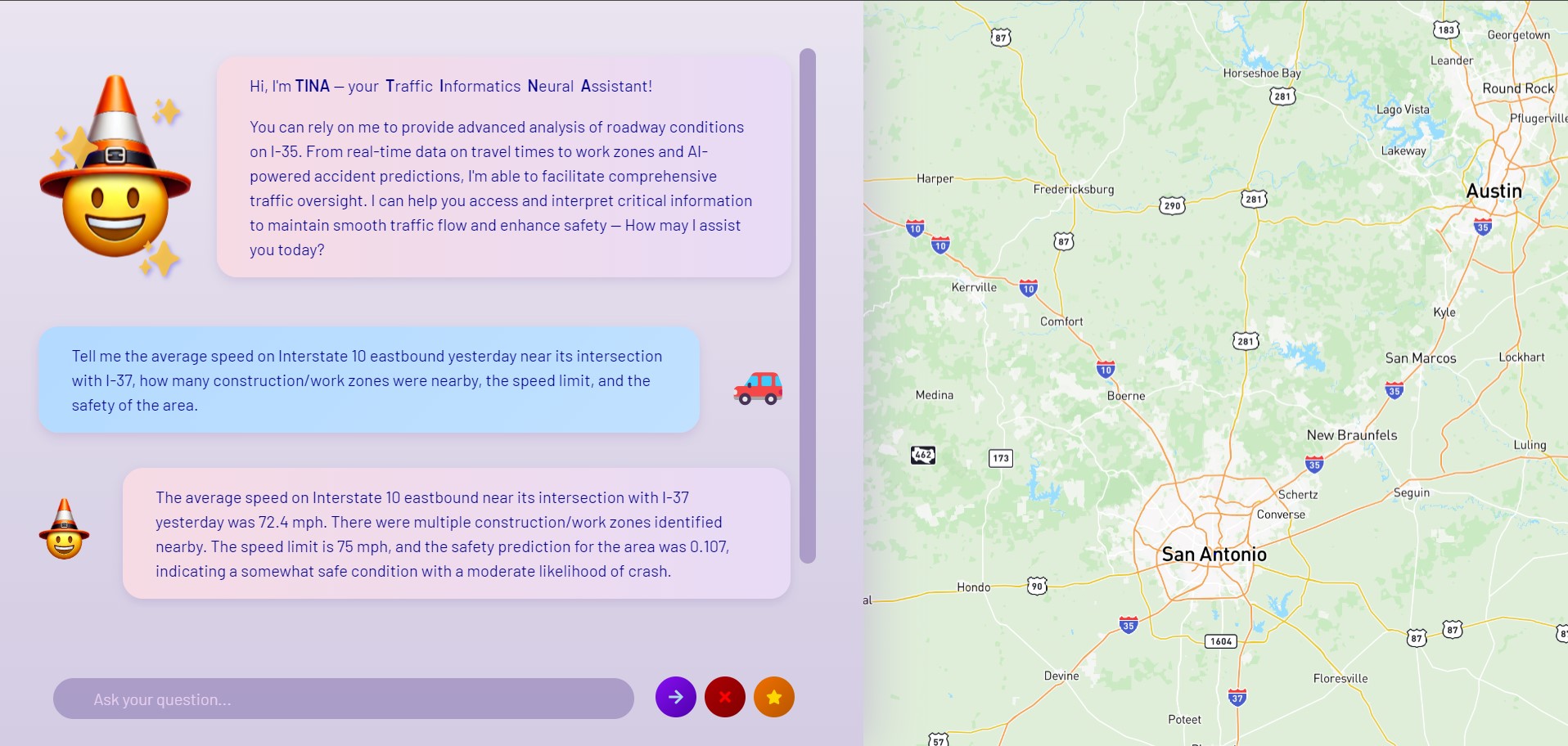

Figure 2: An example interaction with the model.

Accomplishments

We found that LLMs, when integrated into a framework such as LangChain, can provide powerful and accurate natural-language interfaces to underlying tools. These LLMs can successfully distinguish among the parts of complicated, multi-part user queries and appropriately combine, interpret, and format the responses. Because the LLM responses were informed by tool output, they were free of “hallucinations” (factually incorrect model generations) in every test. The end-to-end response time averaged 6.3 seconds, although this varied with the complexity of the query. The tool-calling appropriateness was approximately 90.3%; however, as many “misses” were caused by the lack of a tool specifically aligned with the request, we anticipate that this figure will improve with increased tool availability. Overall, we expect the developed LLM pipeline to be applicable not only to transportation but to interaction with practically any application; this promises to enhance efficiency, simplify and accelerate complex operations, and, in the case of traffic management, alleviate operator burden.