Background

This project investigated integrating ChatGPT-4o with a Scan-N-Plan robotic system to streamline task automation. Scan-N-Plan, an open-source framework based on the Robot Operating System (ROS) for operating manufacturing robots in dynamic environments, traditionally relies on users to write software and/or complex configuration files that define the static workflow of tasks for a specific application. The objective of this project was to move from this fixed, user-specified task definition to a system that automatically composes tasks from high-level user instructions alone.

Approach

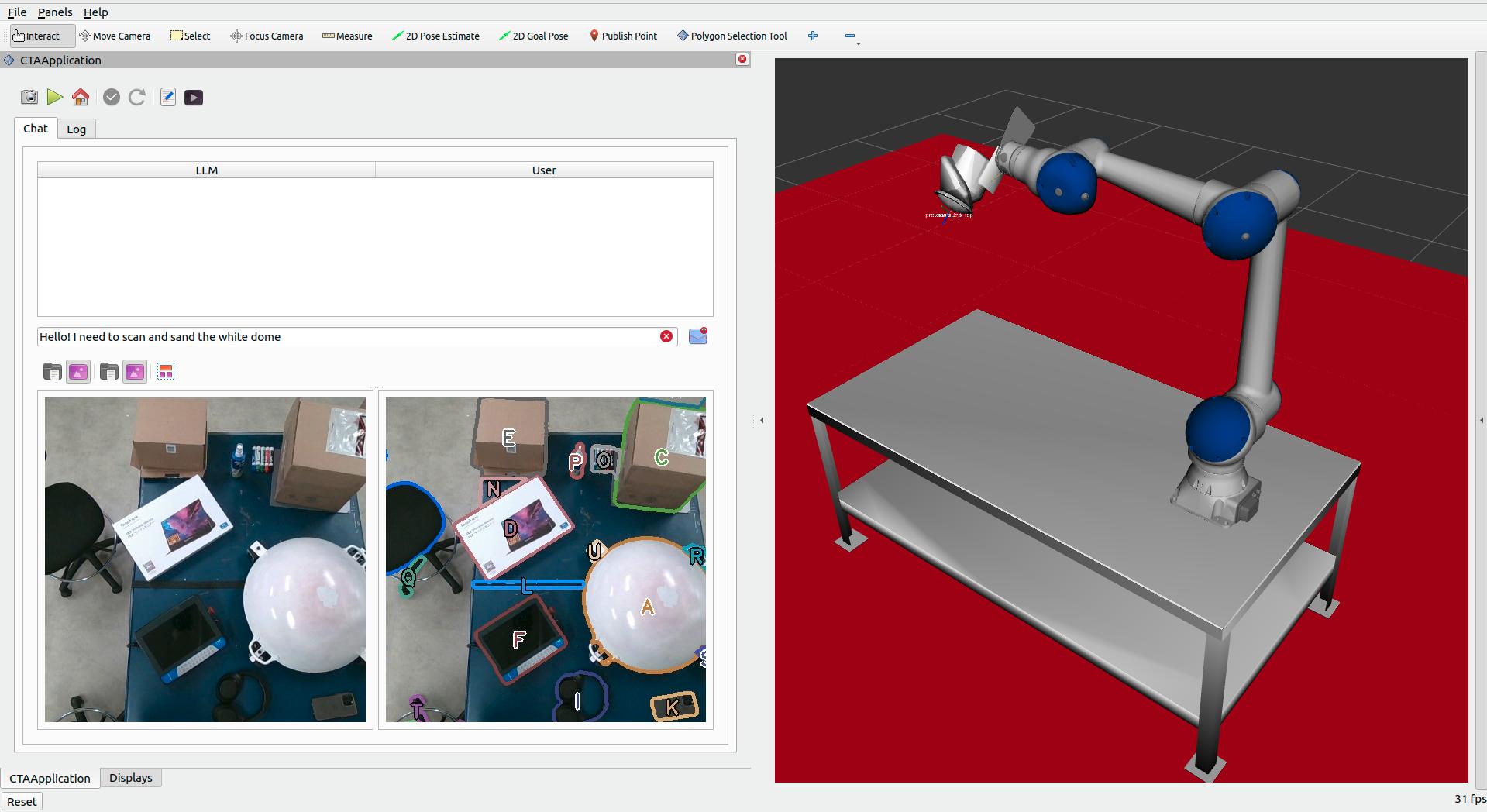

We designed system prompts to guide a large language model (LLM) in interpreting user commands and managing the functionality of the robotic system. We then used the robot to capture an image of its workspace and applied the Segment Anything Model (SAM) to segment the distinct objects in that image, which let the LLM associate user text prompts with individual objects seen by the robot. Finally, given these segmented images and high-level text instructions, we prompted the LLM to generate, display, and execute workflows that carry out specific tasks on the robotic system.

Figure 1: Scan-N-Plan user interface with LLM integration.
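A minimal sketch of how SAM output might be turned into text the LLM can reference alongside the image. It assumes mask records shaped like those returned by `SamAutomaticMaskGenerator.generate()` in the `segment_anything` package (each a dict with an `area` and an XYWH `bbox`); the numbering scheme, the `min_area` threshold, the prompt wording, and the task-node names are hypothetical illustrations, not the project's actual prompts.

```python
from typing import Any


def describe_segments(masks: list[dict[str, Any]], min_area: int = 500) -> list[str]:
    """Turn SAM-style mask records into numbered, human-readable object descriptions.

    Each record is assumed to carry the 'area' (pixels) and 'bbox' (x, y, w, h)
    keys that SamAutomaticMaskGenerator.generate() returns.
    """
    # Drop tiny fragments (min_area is a hypothetical threshold), then number
    # the remaining objects largest-first.
    kept = sorted((m for m in masks if m["area"] >= min_area),
                  key=lambda m: m["area"], reverse=True)
    lines = []
    for label, mask in enumerate(kept, start=1):
        x, y, w, h = mask["bbox"]
        cx, cy = x + w / 2, y + h / 2  # bounding-box center in image pixels
        lines.append(f"Object {label}: center=({cx:.0f}, {cy:.0f}) px, size={w:.0f}x{h:.0f} px")
    return lines


def build_system_prompt(object_lines: list[str], task_nodes: list[str]) -> str:
    """Assemble an illustrative system prompt; the wording and node names are hypothetical."""
    return "\n".join([
        "You control a Scan-N-Plan robot. The attached workspace image marks each",
        "segmented object with the number listed below.",
        *object_lines,
        "Compose workflows only from these task nodes: " + ", ".join(task_nodes) + ".",
        "Reply with a behavior tree in XML and nothing else.",
    ])
```

Numbering objects largest-first is one arbitrary choice; whatever scheme is used, the same numbers would need to be drawn on the image sent to the LLM so that an instruction such as "sand object 2" resolves to a specific mask.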

Accomplishments

Using a segmented image of the robot's workspace and a single short, conversational sentence, we prompted an LLM to generate and execute a valid workflow (a behavior tree in XML format) for scanning and sanding an arbitrary part with a robot in our lab. We demonstrated execution of these sanding workflows with hardware in the loop on two different test parts. We also successfully prompted the LLM to modify proposed workflows, for example by adding and reorganizing tasks, to accommodate application-specific user requirements.

Figure 2: Image of an example robot workspace, segmented with SAM and labeled as contextual reference for the LLM.
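Because an LLM-generated workflow ends up driving hardware, it is worth checking a proposed behavior tree before executing it. The sketch below assumes the BehaviorTree.CPP XML layout (a `<root>` element containing `<BehaviorTree ID=...>` elements, with each node written as its own tag); whether Scan-N-Plan uses exactly this schema, and the action names in `ACTION_NODES`, are assumptions made for illustration. It relies only on Python's standard `xml.etree.ElementTree`.

```python
import xml.etree.ElementTree as ET

# Control-flow nodes that exist in BehaviorTree.CPP.
CONTROL_NODES = {"Sequence", "Fallback", "ReactiveSequence", "ReactiveFallback"}
# Hypothetical action names standing in for the real Scan-N-Plan task nodes.
ACTION_NODES = {"ScanPart", "GenerateToolPaths", "PlanMotion", "ExecuteMotion"}


def validate_behavior_tree(xml_text: str) -> list[str]:
    """Return the problems found in an LLM-proposed tree; an empty list means it passed."""
    try:
        # Strip surrounding whitespace the LLM may add around the XML.
        root = ET.fromstring(xml_text.strip())
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]
    if root.tag != "root":
        return [f"expected <root>, found <{root.tag}>"]
    trees = root.findall("BehaviorTree")
    if not trees:
        return ["no <BehaviorTree> element"]

    problems = []
    # If a main tree is named, it must match one of the declared tree IDs.
    main = root.get("main_tree_to_execute")
    if main is not None and main not in {t.get("ID") for t in trees}:
        problems.append(f"main_tree_to_execute={main!r} matches no BehaviorTree ID")

    # Every node below each <BehaviorTree> must be a known control or action node.
    allowed = CONTROL_NODES | ACTION_NODES
    for tree in trees:
        for node in tree.iter():
            if node is not tree and node.tag not in allowed:
                problems.append(f"unknown node <{node.tag}> in tree {tree.get('ID')!r}")
    return problems
```

Returning a list of problems rather than raising makes it straightforward to feed the messages back to the LLM as a follow-up prompt asking it to repair the tree. This sketch only accepts the compact form where each node is its own tag; generic forms such as `<Action ID="...">` or `<SubTree>` would need additional handling.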