Background

This project investigated whether a single camera can track a barbell in 3D by matching opportunistic features already present on the weight plates. In high-performance sport, the Olympic lifts are a foundational training method for improving athletes' explosive force production. Accurate 3D tracking of the bar lets coaches and instructors describe objectively how to improve lifting technique; studies show that objective data collected from motion capture systems leads to better outcomes in strength training.

Approach

Figure 1: Barbell path reprojected onto the frame, with peak and instantaneous velocities displayed.

This method tracked the barbell and reprojected its location onto the captured video by detecting a set of distinctive features in a template image and in each frame of the target video. We used the matched features to estimate a perspective transform between the two images. The inliers of that transform were then used to solve the Perspective-n-Point (PnP) problem, which gives the position of the bar in 3D. For plates that do not have enough natural features, we implemented a separate tracking system based on AprilTag fiducial markers, which can be placed on the weight plate.

Accomplishments

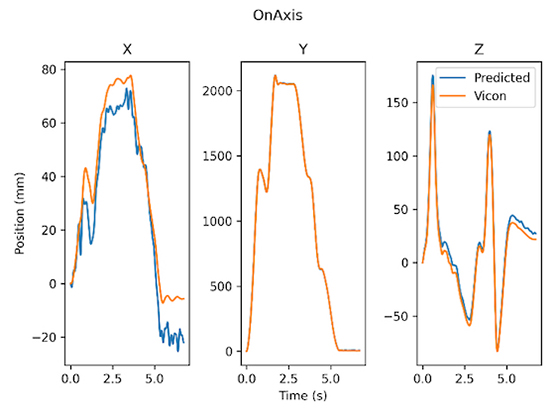

We successfully used opportunistic features and AprilTags to track the position of the barbell in a frame. To validate the accuracy of the barbell tracking system, we compared the barbell path measurements computed using our method against the industry standard measurement system. Root mean squared errors (RMSE) were calculated for each coordinate direction as well as for the absolute positional error. The system tracks the plate with an average RMSE of less than 1 cm in the y and z directions for both on-axis (perpendicular to the frame of motion) and off-axis captures, with a computation time of ~21 ms per frame (50.4 seconds for a 10-second video). The x axis is the least accurate because it is the direction in which we have the least information; its RMSE is over 1 cm but less than 2 cm for both types of capture.
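The error metrics above can be computed along these lines. This is a minimal sketch under two assumptions that the source does not state: the tracked and reference paths are already time-aligned sample by sample, and "absolute positional error" means the Euclidean distance between paired 3D points. The function name `rmse_per_axis` is hypothetical.

```python
import math


def rmse_per_axis(estimated, reference):
    """Return (rmse_x, rmse_y, rmse_z, rmse_position) for paired 3D samples.

    Both inputs are equal-length sequences of (x, y, z) tuples in the same
    units, assumed to be time-aligned.
    """
    if len(estimated) != len(reference) or len(estimated) == 0:
        raise ValueError("inputs must be non-empty and the same length")
    n = len(estimated)
    squared = [0.0, 0.0, 0.0]  # running sum of squared error per axis
    for est, ref in zip(estimated, reference):
        for axis in range(3):
            squared[axis] += (est[axis] - ref[axis]) ** 2
    per_axis = tuple(math.sqrt(s / n) for s in squared)
    # The squared Euclidean error is the sum of the per-axis squared errors.
    position = math.sqrt(sum(squared) / n)
    return per_axis + (position,)
```

Because the positional term combines all three axes, it is always at least as large as the largest per-axis RMSE, so the x axis error bounds it from below.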

Figure 2: Position RMSE compared with data from the industry standard cameras.