Background

Robots are well-established tools in industry because of their speed, strength, and repeatability, among other reasons. However, many tasks are not suitable for full automation, often because they require decision-making or dexterity that is too challenging for a robot. One solution is to automate part of the process while a human operator performs the challenging steps.

Traditional industrial robots are extremely dangerous for people to be around because of their high speeds and payloads. Collaborative robots (or cobots) have emerged to fill the need for a robot that can safely work alongside a human. These cobots are built in accordance with safety standards, are limited in speed and payload, and have built-in sensors that stop motion when it is impeded. They are growing in prevalence and have the potential to greatly improve productivity by leveraging the differing skills of humans and robots.

However, collaborative robots do not respond proactively to humans in their environment. Instead, a cobot waits until its motion is impeded and only then goes into a protective stop. This is both a safety concern (due to the gray area of non-collaborative end-effectors) and a productivity issue. At minimum, a protective stop brings a process step to a temporary halt until the robot can be reset. At the other end of the spectrum, a protective stop can halt the process indefinitely until a corrective action plan is implemented.

Approach

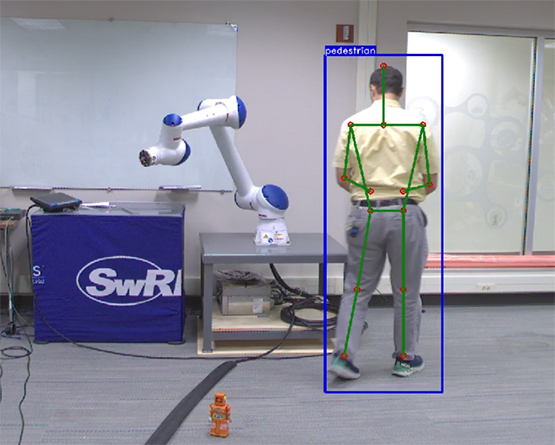

This project’s goal is to make robots more intelligent collaborators by enabling them to anticipate a collision with a person and proactively change their motion to avoid the person while still completing their task. We are leveraging the markerless motion capture (MaMoCap) system developed by Divisions 10 and 18 to track the person's position accurately and easily. The significant changes this project has made to the MaMoCap system include network optimization to enable real-time operation on live data and the development of an open-source robotic software interface for the output data.
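As a rough illustration of what anticipating a collision can mean, the sketch below extrapolates one tracked keypoint forward in time and reports when it would first come within a safety radius of a point on the robot. The constant-velocity assumption, the function name `time_to_violation`, and all parameter names are illustrative assumptions, not the MaMoCap interface or the project's actual prediction method.

```python
import math

def time_to_violation(p_prev, p_curr, dt, robot_point, horizon, safety_radius, steps=20):
    """Return the earliest sampled time (s) at which a keypoint, extrapolated
    at constant velocity, comes within safety_radius of robot_point.
    Returns None if no violation is predicted within the horizon.

    Hypothetical helper: constant velocity is an assumed motion model.
    """
    # Finite-difference velocity estimate from the two most recent samples.
    velocity = [(c - p) / dt for p, c in zip(p_prev, p_curr)]
    for i in range(steps + 1):
        t = horizon * i / steps
        predicted = [c + v * t for c, v in zip(p_curr, velocity)]
        if math.dist(predicted, robot_point) < safety_radius:
            return t
    return None

# A wrist moving toward the robot at about 1 m/s along x (positions in meters).
approaching = time_to_violation(
    p_prev=(1.0, 0.0, 1.0), p_curr=(0.95, 0.0, 1.0), dt=0.05,
    robot_point=(0.5, 0.0, 1.0), horizon=0.5, safety_radius=0.2,
)
# The same wrist moving away from the robot.
retreating = time_to_violation(
    p_prev=(0.95, 0.0, 1.0), p_curr=(1.0, 0.0, 1.0), dt=0.05,
    robot_point=(0.5, 0.0, 1.0), horizon=0.5, safety_radius=0.2,
)
```

A planner could use a non-`None` result as a trigger to replan before contact occurs, rather than waiting for a protective stop.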

The other core element of this project is using this motion capture data intelligently. We are improving and adding functionality to SwRI-developed real-time robot motion planners. The additions include a method of integrating human pose data into the collision environment, new solver constraints, and a method for smoothly executing the changing trajectories.
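One common way to bring pose data into a collision environment is to treat each limb as a capsule (a line segment between two keypoints with a radius) and measure clearance to the robot. The sketch below is a minimal version of that idea under stated assumptions: the keypoint names, the radii, the single-sphere robot model, and the function names are all hypothetical and are not taken from the SwRI planners.

```python
import math

def point_segment_distance(p, a, b):
    """Shortest distance from point p to the segment between a and b."""
    ab = [bi - ai for ai, bi in zip(a, b)]
    ap = [pi - ai for ai, pi in zip(a, p)]
    ab_len_sq = sum(c * c for c in ab)
    if ab_len_sq == 0.0:
        # Degenerate segment: both keypoints coincide.
        return math.dist(p, a)
    # Project p onto the segment and clamp to its endpoints.
    t = sum(x * y for x, y in zip(ap, ab)) / ab_len_sq
    t = max(0.0, min(1.0, t))
    closest = [ai + t * c for ai, c in zip(a, ab)]
    return math.dist(p, closest)

def min_clearance(keypoints, bones, limb_radius, robot_point, robot_radius):
    """Smallest gap between a robot sphere and any limb capsule.
    A negative value means the two volumes overlap."""
    nearest = min(
        point_segment_distance(robot_point, keypoints[i], keypoints[j])
        for i, j in bones
    )
    return nearest - limb_radius - robot_radius

# Hypothetical arm pose in meters, as a motion capture system might report it.
keypoints = {
    "shoulder": (0.0, 0.0, 1.4),
    "elbow": (0.3, 0.0, 1.4),
    "wrist": (0.6, 0.0, 1.4),
}
bones = [("shoulder", "elbow"), ("elbow", "wrist")]

clearance = min_clearance(
    keypoints, bones, limb_radius=0.05,
    robot_point=(0.45, 0.4, 1.4), robot_radius=0.1,
)
```

A clearance value like this could feed a solver constraint (for example, requiring it to stay above a chosen margin), though how the project's planners actually formulate their constraints is not described here.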

Accomplishments

The result of this project will be a robot motion planning system that can actively avoid a person moving in the environment. Functionality will be demonstrated in a representative workcell using a collaborative robot.

This demonstration workcell will showcase the new real-time capabilities of the markerless motion capture system, integrated with the real-time robot motion planning and execution systems. It will serve as a foundation for future research and a proof of concept for our industrial partners.

Figure 1: Markerless Motion Capture results shown while a person shares a workcell with a robot.